Why Flowent is the Most Reliable and Lowest LLM Inference Cost Conversational AI Platform in 2025

Real conversations, real token consumption, real results: The definitive LLM performance comparison

When evaluating conversational AI platforms, enterprise teams face a critical question: Can you have both intelligent conversations AND LLM cost efficiency? Rasa forces you to choose. Premium models deliver better conversations but consume more tokens and API credits. Budget options save on inference costs but frustrate users with rigid, brittle interactions.

Flowent breaks this tradeoff.

Through real-world testing with identical use cases, Flowent consistently delivers superior conversation quality while cutting LLM inference costs by 35–47% — making it up to 1.9x more cost efficient than Rasa. Here's the token consumption data that proves it.

The Test: Real Conversations, Real Token Consumption

We tested both platforms with identical scenarios using two different LLM models (Qwen and GPT-4o-mini) to demonstrate consistent token efficiency advantages across different AI backends.

Test Scenario: Travel Booking Assistant

This test uses the exact same excursion booking scenario from Rasa's CALM showcase example in their official GitHub repository. We implemented this identical use case in Flowent to provide a direct, fair comparison.

- User Goal: Book an excursion

- Complexity: Handle ambiguous inputs, provide recommendations, complete booking

- Models Tested: Qwen 2.5 72B Instruct Turbo, GPT-4o-mini

Test 1: Qwen 2.5 Model Comparison

Rasa Performance

💬 User: hi

🤖 Bot: Hello! how can I help you today?

💬 User: idk

🤖 Bot: It's okay! If you're not sure, we can just chat. How was your day?

💬 User: how can you help me

🤖 Bot: I can list your flight bookings or change them. I can also book rental cars, hotels, and excursions! If you need something else, please email us help@example.com.

💬 User: I wanna go on a trip

🤖 Bot: Hello! how can I help you today?

💬 User: I wanna go on a excursion

🤖 Bot: in which city?

💬 User: basel

🤖 Bot: basel has some wonderful attractions! Here are a few popular options:

* Basel Minster

* Kunstmuseum Basel

* Basel Zoo

💬 User: Basel Zoo

🤖 Bot: You've chosen Basel Zoo. Shall I book that for you?

💬 User: yes

🤖 Bot: Wonderful. I've booked your visit to Basel Zoo

Issues Identified:

- ❌ Bot didn't show available services upfront - user had to ask "how can you help me"

- ❌ Bot restarted conversation mid-flow ("Hello! how can I help you today?" repeated)

- ❌ Generic, unfocused responses to ambiguous input

LLM Token Analysis (Qwen):

- Input/Output Tokens: 10,831

- LLM Inference Cost: $0.009747

- Projected LLM Costs (1M conversations): $9,747

Flowent Performance

💬 User: hi

🤖 Flowent: Hello! How can I assist you today? Here are the services I can help with:

- Book Excursion: search and book an excursion

- Search Hotel: Search for hotels

- Search Rental Car: Search for rental cars

Which service would you like to use?

💬 User: Excursion

🤖 Flowent: Great choice! To help you find the perfect excursion, I need to know the city where you plan to go. Could you please provide the city name?

💬 User: Paris

🤖 Flowent: Here are some excursion recommendations for Paris:

- Eiffel Tower Evening Tour

- Louvre Museum Private Visit

- Seine River Cruise

- Montmartre Walking Tour

- Versailles Palace Day Trip

I hope you find these options interesting!

🤖 Flowent: Please provide the name of the excursion you want to book.

💬 User: Eiffel Tower

🤖 Flowent: Your visit to Eiffel Tower Evening Tour in Paris has been successfully booked. Enjoy your excursion!

Performance Advantages:

- ✅ Clear, structured service menu from start

- ✅ Consistent conversation flow without restarts

LLM Token Analysis (Qwen):

- Input/Output Tokens: 5,998

- LLM Inference Cost: $0.005398

- Projected LLM Costs (1M conversations): $5,398

Qwen Model Results Summary

| Metric | Rasa | Flowent | Flowent Advantage |

|---|---|---|---|

| Token Consumption | 10,831 | 5,998 | 45% fewer tokens |

| LLM Inference Cost | $0.009747 | $0.005398 | 45% cost reduction |

| 1M Conversations | $9,747 | $5,398 | $4,349 savings |

| Conversation Quality | Poor (restarts, errors) | Excellent (smooth flow) | Superior UX |

Test 2: GPT-4o-mini Model Comparison

Rasa Performance

💬 User: hi

🤖 Bot: Hello! how can I help you today?

💬 User: I don't know

🤖 Bot: I can list your flight bookings or change them. I can also book rental cars, hotels, and excursions! If you need something else, please email us help@example.com.

💬 User: excursion

🤖 Bot: in which city?

💬 User: basel

🤖 Bot: basel has some wonderful attractions! Here are a few popular options:

* Basel Minster

* Kunstmuseum Basel

* Basel Zoo

💬 User: Basel Zoo

🤖 Bot: You've chosen Basel Zoo. Shall I book that for you?

💬 User: yes

🤖 Bot: Wonderful. I've booked your visit to Basel Zoo

Issues Identified:

- ❌ Bot didn't show available services upfront - user had to say "I don't know" to get service options

LLM Token Analysis (GPT-4o-mini):

- Input/Output Tokens: 7,697

- LLM Inference Cost: $0.001185

- Projected LLM Costs (1M conversations): $1,185

Flowent Performance

💬 User: hi

🤖 Flowent: Hello! I can assist you with the following services:

1. Book Excursion - search and book an excursion

2. Search Hotel - search for hotels

3. Search Rental Car - search for rental cars

Which service do you need help with?

💬 User: Excursion

🤖 Flowent: Please provide the city for your excursion.

💬 User: paris

🤖 Flowent: Here are some excursion recommendations for Paris:

- Eiffel Tower Evening Tour

- Louvre Museum Private Visit

- Seine River Cruise

- Montmartre Walking Tour

- Versailles Palace Day Trip

🤖 Flowent: Please provide the name of the excursion you want to book.

💬 User: Eiffel Tower

🤖 Flowent: Your visit to Eiffel Tower in paris has been successfully booked. Enjoy your excursion!

Performance Advantages:

- ✅ Immediately presents clear options

- ✅ Complete booking confirmation

LLM Token Analysis (GPT-4o-mini):

- Input/Output Tokens: 4,313

- LLM Inference Cost: $0.000731

- Projected LLM Costs (1M conversations): $731

GPT-4o-mini Model Results Summary

| Metric | Rasa | Flowent | Flowent Advantage |

|---|---|---|---|

| Token Consumption | 7,697 | 4,313 | 44% fewer tokens |

| LLM Inference Cost | $0.001185 | $0.000731 | 38% cost reduction |

| 1M Conversations | $1,185 | $731 | $454 savings |

| Conversation Quality | Adequate but rigid | Excellent flow | Superior UX |

Enterprise LLM Cost Impact

Annual LLM Inference Costs (10M Conversations)

| Platform Type | Qwen Model | GPT-4o-mini |

|---|---|---|

| Rasa | $97,470 | $11,850 |

| Flowent | $53,980 | $7,310 |

| Annual LLM Savings | $43,490 | $4,540 |

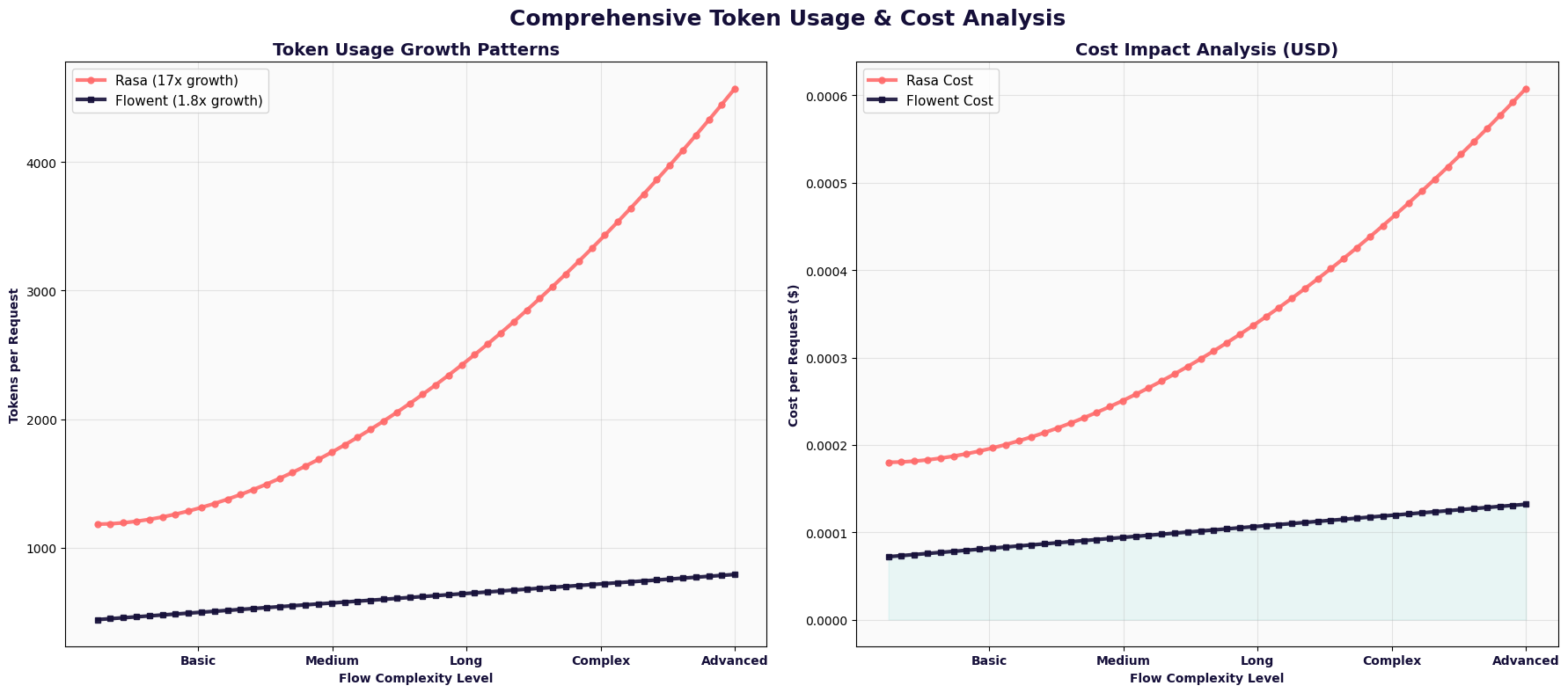

Scaling Behavior: Complex Flows

As conversation flows become more complex, Flowent maintains its efficiency advantage. The chart below demonstrates how Rasa experiences significant token growth (3.9x increase from 1,183 to 4,572 tokens per request), while Flowent's optimized architecture delivers controlled linear scaling with only 1.8x token growth (441 to 792 tokens per request). This dramatic difference means enterprises can deploy sophisticated conversation flows without facing the cost explosion typical of competing platforms.

Cost Impact with GPT-4o-mini:

- Rasa: $0.000180 → $0.000608 per request (3.4x cost increase)

- Flowent: $0.000072 → $0.000132 per request (1.8x cost increase)

Comprehensive analysis showing Flowent's linear growth vs Rasa's exponential scaling

Comprehensive analysis showing Flowent's linear growth vs Rasa's exponential scaling

Beyond LLM Costs: The Token Efficiency Advantage

Developer Experience

| Development Aspect | Rasa | Flowent |

|---|---|---|

| Training Data Required | Thousands of examples | None |

| Maintenance Effort | High | Minimal |

| Debugging Complexity | Very High | Low |

| Business Logic Changes | YAML + Retrain | YAML Only |

The Strategic Decision

Flowent's LLM efficiency advantages compound over time:

Immediate Benefits:

- Up to 1.9x more cost efficient compared to Rasa

- Superior conversation quality through optimized prompting

- Faster deployment without prompt engineering overhead

Long-term Value:

- No token consumption increases from model retraining

- Simplified prompt management and updates

- Scalable LLM architecture without token waste

Risk Mitigation:

- Deterministic, debuggable conversation logic

- No model drift increasing token consumption

- Predictable LLM cost scaling

Why Flowent Dominates Every Benchmark

This article shows token efficiency, but Flowent's advantages extend across all metrics:

Key Performance Areas:

- Token Efficiency: 35–47% fewer tokens than traditional platforms — making Flowent up to 1.9x more cost efficient

- Infrastructure Scale: Single server handles 20,000 concurrent users

- Architecture: LLM-powered flows vs. fragile intent classification

🏆 Flowent's Complete Performance Advantage

| 🎯 Benchmark Category | ⚡ Flowent Advantage | 📖 Deep Dive Article |

|---|---|---|

| 🔥 Token Consumption | up to 1.9x more cost efficient demonstrated here | This article |

| ⚙️ Infrastructure Scaling | 20,000 concurrent users on 1 server | Chat Infrastructure Scalability |

| 🧠 Conversation Architecture | More intelligent, no training data, adaptive flows | LLM Declarative Flows Future |

🎯 Result: Superior performance across cost, scalability, maintainability, and user experience.

Ready for LLM Cost-Effective Intelligence?

The token consumption data speaks for itself: Flowent delivers both superior conversation quality AND significant LLM cost savings. Whether you're processing thousands or millions of conversations, the token efficiency and cost advantages are clear.

See the difference in action.

Want to validate these results in your environment? Schedule a technical demonstration and see Flowent's performance with your specific use cases.

Experience the perfect balance of intelligence and efficiency—contact Janan Tech today.